Introduction

The realm of artificial intelligence (AI) has been continuously evolving, with Text- To- Speech Datasets technology standing as one of its most dynamic frontiers. This technology, which converts written text into spoken words, is not just a tool for creating more human-like digital assistants, but it also holds the key to numerous applications ranging from accessibility solutions for the visually impaired to interactive educational tools. The backbone of any successful TTS system lies in the quality and diversity of the datasets used in its training. In this blog, we will delve into some of the top text to speech datasets that are shaping the future of AI development.

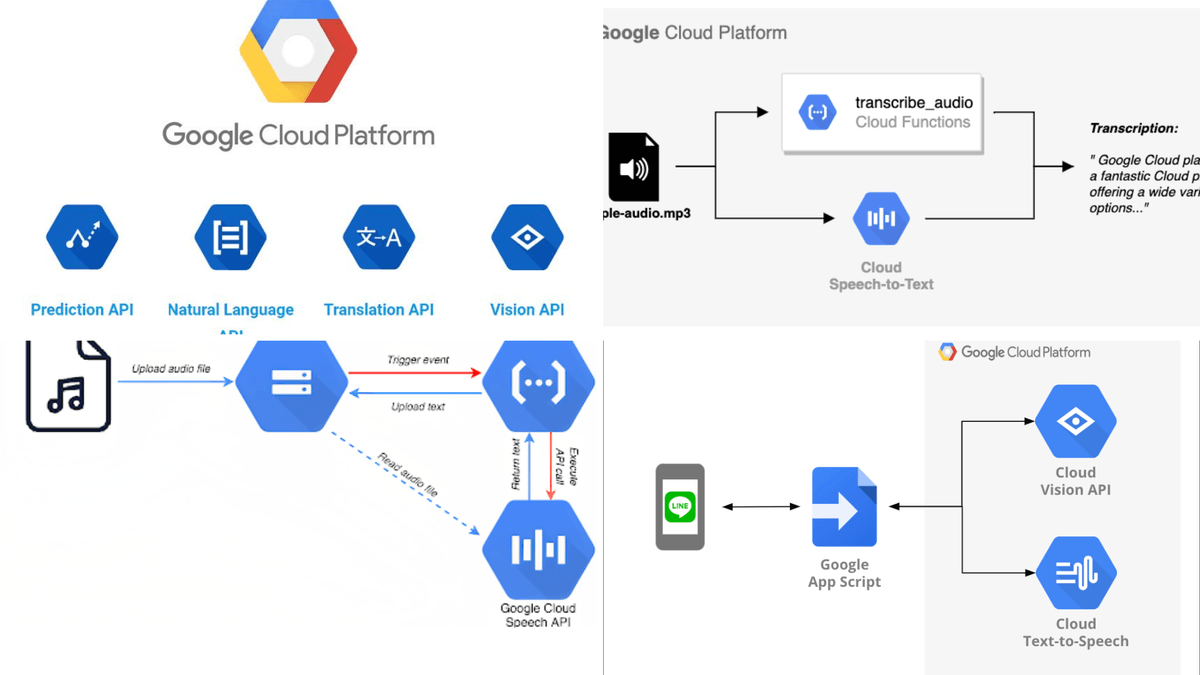

1. The Google Speech-to-Text Dataset

Google's Speech-to-Text dataset is a comprehensive collection used widely in the industry. It includes a vast array of languages and dialects, making it a versatile resource for developing multilingual TTS systems. What sets this dataset apart is its inclusion of various accents and speaking styles, providing a rich training ground for AI models to understand and replicate human speech more accurately.

2. The LibriSpeech Dataset

LibriSpeech is an open-source dataset derived from audiobooks that are part of the LibriVox project. It is particularly valuable for its clean, high-quality audio samples and the diversity of its spoken content. The dataset covers a range of English accents and is ideal for training models that require a broad understanding of English pronunciation.

3. The Mozilla Common Voice Project

Mozilla's Common Voice is an ambitious project aimed at making voice data more publicly accessible. What makes Common Voice unique is its crowd-sourced approach, allowing people from all over the world to contribute their voices. This dataset is continuously growing and is particularly useful for developing TTS systems that can cater to a wide variety of Text Data Collection languages and dialects.

4. VoxForge

VoxForge is another open-source platform, similar to Mozilla's Common Voice, which focuses on collecting speech samples from volunteers. It supports numerous languages and is constantly being updated with new contributions. This dataset is especially beneficial for AI projects that require a diverse set of voice samples.

5. TED-LIUM Corpus

The TED-LIUM dataset consists of transcriptions from TED Talks, providing a rich source of varied, sophisticated, and naturally spoken language. It's an excellent resource for training models to handle complex sentence structures and diverse vocabulary, which is typical in academic or professional settings.

6. The LJSpeech Dataset

The LJSpeech dataset is a high-quality single-speaker speech dataset suitable for training TTS models that require a consistent voice. It contains 13,100 short audio clips of a single female speaker, making it ideal for projects that need a uniform voice.

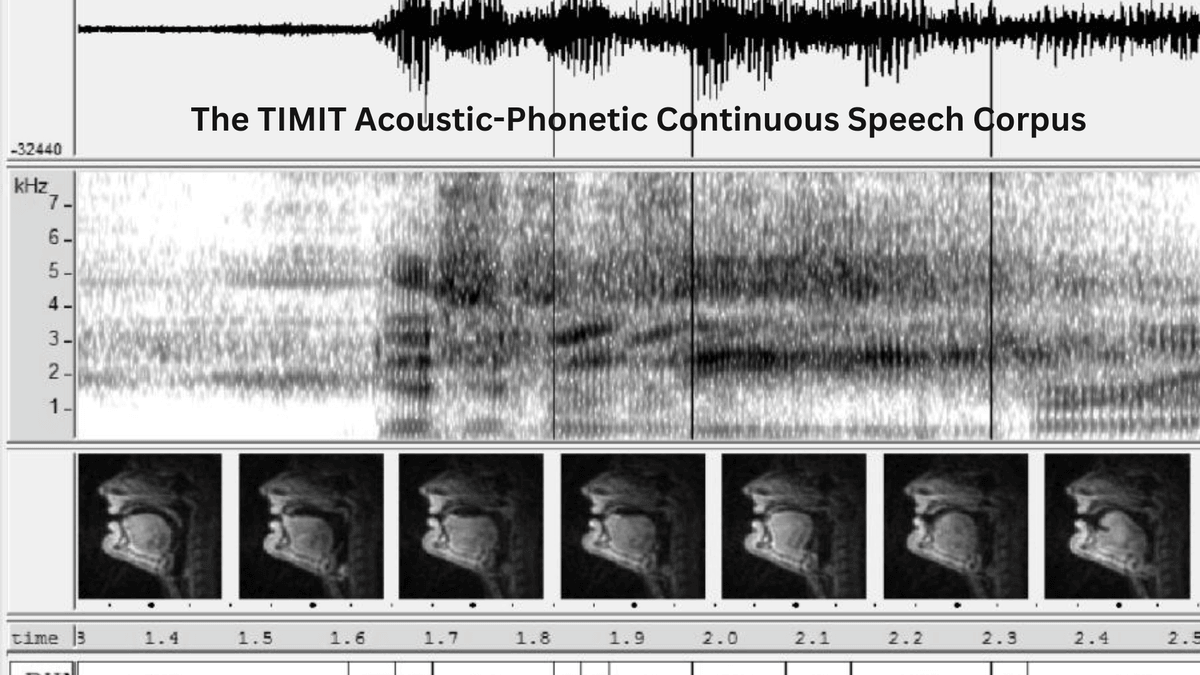

7. The TIMIT Acoustic-Phonetic Continuous Speech Corpus

TIMIT is one of the older datasets used in speech technology but remains relevant due to its detailed phonetic and dialectal annotations. This dataset is particularly useful for fine-tuning the phonetic aspects of speech in TTS systems.

8. M-AILABS Speech Dataset

The M-AILABS Speech Dataset is an extensive multi-language dataset, providing large volumes of data in several languages and dialects. This dataset is an excellent choice for developing TTS systems that need to operate in multiple linguistic contexts.

9. The Blizzard Challenge Datasets

The Blizzard Challenge, an annual competition, provides datasets each year for the development of TTS systems. These datasets vary annually, offering unique challenges and opportunities for developers to test and improve their systems.

10. The Spoken Wikipedia Corpora

This dataset consists of spoken articles from Wikipedia, offering a wide range of topics and speaking styles. It's an invaluable resource for training models that require a comprehensive understanding of academic and encyclopedic language.

The Importance of Diverse Datasets in TTS Development

The diversity of datasets in TTS development cannot be overstated. Different datasets offer various challenges and learning opportunities for AI models. For instance, datasets with multiple speakers in various languages and accents, like Google's Speech-to-Text and Mozilla's Common Voice, are crucial for creating inclusive and accessible TTS systems. On the other hand, single-speaker datasets like LJSpeech provide consistency, which is essential for certain applications like digital assistants or audiobook narration.

Moreover, the complexity of the content also plays a significant role. Datasets derived from professional or academic sources, such as the TED-LIUM Corpus or the Spoken Wikipedia, are invaluable for developing systems capable of handling complex language structures and specialized jargon.

Future Directions in TTS Dataset Development

As the demand for more advanced and natural-sounding TTS systems grows, so does the need for more comprehensive and diverse datasets. Future directions may involve the integration of emotional intonation and context-aware speech synthesis, requiring datasets with more nuanced voice recordings. Additionally, as voice technology becomes more embedded in our daily lives, the need for privacy-conscious dataset collection methods will also increase.

conclusion

the datasets we discussed are pivotal in advancing the field of TTS. They provide the foundational voice data necessary to train sophisticated AI models, enabling them to understand and replicate human speech in all its complexity and variety. As AI continues to evolve, the continuous expansion and enhancement of these datasets will be crucial in unlocking the full potential of voice technology.

Text-To-Speech Datasets With GTS Experts

In the captivating realm of AI, the auditory dimension is undergoing a profound transformation, thanks to Text-to-Speech technology. The pioneering work of companies like Globose Technology Solutions Pvt Ltd (GTS) in curating exceptional TTS datasets lays the foundation for groundbreaking auditory AI advancements. As we navigate a future where machines and humans communicate seamlessly, the role of TTS datasets in shaping this sonic learning journey is both pivotal and exhilarating.